The Phantom Menace

This text is part of our blog series, in which individuals write about various digital preservation topics in a free-form way. The series is not intended as rules or instructions, but as inspiration for ideas and discussion.

A long time ago in a server far, far away....

Turmoil has engulfed PDF validation. Valid PDFs have been deemed broken by automatic validation tools. Hoping to resolve the matter, a junior developer has taken action and patched the validation tools by hand, leaving no documentation behind. While the land is in disarray, we have dispatched paskana to investigate these patches and settle the conflict.

Episode IV - Dynamic analysis

Upon landing server side, paskana senses that something is off with the patches. Valid PDFs are now reported as valid. Alas, so are all other PDFs. This requires closer program analysis.

Program analysis and reverse engineering are split into two main categories: dynamic analysis and static analysis. Dynamic analysis means executing the program and studying its behavior. Static analysis, on the other hand, covers all the methods of studying the program without executing it.

When working with trusted binaries, it is usually easiest to start studying the behavior at the highest possible level, then narrow down the scope and perform more advanced analysis only where needed. In practice, this can mean simply executing the binary and seeing what happens.

Since file-scraper has been patched to handle the previous blogpost's PDF file, let's try simply validating that PDF file.

$ file phantom_of_a_pdf_file_blog_post_2024.pdf
phantom_of_a_pdf_file_blog_post_2024.pdf: PDF document, version 1.4

$ scraper scrape-file phantom_of_a_pdf_file_blog_post_2024.pdf
{
    "path": "phantom_of_a_pdf_file_blog_post_2024.pdf",
    "MIME type": "application/pdf",
    "version": "1.4",
    "metadata": {
        "0": {
            "index": 0,
            "mimetype": "application/pdf",
            "stream_type": "binary",
            "version": "1.4"
        }
    },
    "grade": "fi-dpres-acceptable-file-format",
    "well-formed": true
}

As expected, the PDF is correctly identified as a valid PDF version 1.4 document. A known broken PDF also produces some errors.

$ scraper scrape-file invalid_1.3_removed_xref.pdf
{
    "path": "invalid_1.3_removed_xref.pdf",
    "MIME type": "application/pdf",
    "version": "(:unav)",
    "metadata": {
        "0": {
            "index": 0,
            "mimetype": "application/pdf",
            "stream_type": "binary",
            "version": "(:unav)"
        }
    },
    "grade": "fi-dpres-unacceptable-file-format",
    "well-formed": false,
    "errors": {
        "GhostscriptScraper": [
            "Invalid xref entry, incorrect format.\n\nThe following errors were encountered at least once while processing this file:\n\txref table was repaired\n\nThe following warnings were encountered at least once while processing this file:\n\n **** This file had errors that were repaired or ignored.\n **** The file was produced by: \n **** >>>> GPL Ghostscript 9.07 <<<<\n **** Please notify the author of the software that produced this\n **** file that it does not conform to Adobe's published PDF\n **** specification.\n\n",
            "GPL Ghostscript 10.03.1 (2024-05-02)\nCopyright (C) 2024 Artifex Software, Inc. All rights reserved.\nThis software is supplied under the GNU AGPLv3 and comes with NO WARRANTY:\nsee the file COPYING for details.\nProcessing pages 1 through 1.\nPage 1\n\txref entry not exactly 20 bytes\n\txref entry not valid format\n"
        ],
        "ResultsMergeScraper": [
            "Conflict with values '1.4' and '1.3' for 'version'."
        ],
        "MimeMatchScraper": [
            "File format version is not supported."
        ]
    }
}

However, there are some key differences compared to an unpatched scraper analyzing the same file. Most notably, JHOVE errors are completely missing, indicating that JHOVE considers the broken PDF valid as well.
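A quick way to spot which tools complained is to list the keys of the "errors" object in the scraper report. The following is a minimal Python sketch, not part of file-scraper: the sample report is abbreviated from the output above, the helper names are made up for illustration, and it assumes that a JHOVE-based scraper would have "jhove" somewhere in its name.

```python
import json

# Abbreviated copy of the scraper report shown above; only the fields
# used here are kept and the long Ghostscript messages are shortened.
SAMPLE_REPORT = """
{
    "path": "invalid_1.3_removed_xref.pdf",
    "well-formed": false,
    "errors": {
        "GhostscriptScraper": ["Invalid xref entry, incorrect format."],
        "ResultsMergeScraper": ["Conflict with values '1.4' and '1.3' for 'version'."],
        "MimeMatchScraper": ["File format version is not supported."]
    }
}
"""


def scrapers_with_errors(report_json):
    """Return the sorted names of the scrapers that reported errors."""
    report = json.loads(report_json)
    return sorted(report.get("errors", {}))


def mentions_tool(scraper_names, tool):
    """Check whether any scraper name contains the tool name (assumed naming)."""
    return any(tool.lower() in name.lower() for name in scraper_names)


names = scrapers_with_errors(SAMPLE_REPORT)
print(names)
print("JHOVE reported errors:", mentions_tool(names, "jhove"))
```

For the report above, no scraper with "jhove" in its name appears among the errors, which matches the observation that JHOVE stayed silent.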

We can easily check what scraper is doing behind the scenes with the strace tool. Strace runs or attaches to the specified process and reports all system calls and their return values. In this specific case, scraper makes around 25 thousand system calls, so reading them one by one is not worth the effort. Instead, we can filter the output for the keywords we are looking for.

$ strace -f scraper scrape-file invalid_1.3_removed_xref.pdf 2>&1 | grep execve | grep '"jhove"'
[pid 449701] execve("/home/paskana/.local/bin/jhove", ["jhove", "-h", "XML", "-m", "PDF-hul", "invalid_1.3_removed_xref.pdf"], 0x7f89572ef160 /* 37 vars */) = -1 ENOENT (No such file or directory)
[pid 449701] execve("/usr/local/bin/jhove", ["jhove", "-h", "XML", "-m", "PDF-hul", "invalid_1.3_removed_xref.pdf"], 0x7f89572ef160 /* 37 vars */) = -1 ENOENT (No such file or directory)
[pid 449701] execve("/usr/bin/jhove", ["jhove", "-h", "XML", "-m", "PDF-hul", "invalid_1.3_removed_xref.pdf"], 0x7f89572ef160 /* 37 vars */ <unfinished ...>

-f follows any child processes forked by the traced process. 2>&1 redirects stderr to stdout, which makes the following greps work, because strace writes its trace to stderr. The greps then keep only the lines that contain both the execve and "jhove" strings. The execve system call is nicely explained in the system calls manual ($ man execve):
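The same filtering can be sketched in Python, which is handy when the trace has been saved and needs more than a couple of greps. This is only an illustration: filter_lines is a made-up helper, the first sample line is copied from the strace output above, and the second one is an invented stand-in for the thousands of unrelated system calls.

```python
def filter_lines(lines, *needles):
    """Keep the lines containing every needle, like a chain of greps."""
    return [line for line in lines if all(n in line for n in needles)]


SAMPLE_TRACE = [
    # Copied from the strace output above.
    '[pid 449701] execve("/usr/local/bin/jhove", ["jhove", "-h", "XML", "-m", '
    '"PDF-hul", "invalid_1.3_removed_xref.pdf"], 0x7f89572ef160 /* 37 vars */) '
    "= -1 ENOENT (No such file or directory)",
    # Invented example of an unrelated system call.
    '[pid 449701] openat(AT_FDCWD, "/etc/ld.so.cache", O_RDONLY|O_CLOEXEC) = 3',
]

# Equivalent of: grep execve | grep '"jhove"'
matches = filter_lines(SAMPLE_TRACE, "execve", '"jhove"')
for line in matches:
    print(line)
```

Note that the needle includes the double quotes, just like the grep pattern, so that only execve calls whose argv[0] is exactly "jhove" are kept.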

execve() executes the program referred to by pathname. This causes the program that is currently being run by the calling process to be replaced with a new program, with newly initialized stack, heap, and (initialized and uninitialized) data segments.

The above shows the exact command scraper invokes when analyzing the PDF with JHOVE.

$ jhove -h XML -m PDF-hul invalid_1.3_removed_xref.pdf
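Turning an execve line from strace back into a shell command can also be automated. The sketch below is a rough illustration under a few assumptions: command_from_execve and its regular expression are made up for this post, and they only cope with simple argument lists, so arguments that strace truncates with "..." or that contain a closing square bracket are not handled.

```python
import ast
import re
import shlex

# Matches execve("<path>", [<argv>] and captures the argv list as text.
EXECVE_RE = re.compile(r'execve\("(?P<path>[^"]+)", (?P<argv>\[.*?\])')


def command_from_execve(line):
    """Rebuild a shell command from one strace execve line, or return None."""
    match = EXECVE_RE.search(line)
    if match is None:
        return None
    # strace prints argv as a list of double-quoted strings, which for
    # simple arguments is also a valid Python list literal.
    argv = ast.literal_eval(match.group("argv"))
    return shlex.join(argv)


# Copied from the strace output above.
LINE = (
    '[pid 449701] execve("/usr/bin/jhove", ["jhove", "-h", "XML", "-m", '
    '"PDF-hul", "invalid_1.3_removed_xref.pdf"], 0x7f89572ef160 /* 37 vars */ '
    "<unfinished ...>"
)

command = command_from_execve(LINE)
print(command)
```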

Running the jhove command directly shows that JHOVE does indeed think the file is a valid version 1.4 PDF. We can check whether anyone has tampered with the JHOVE RPM installation.

$ rpm --verify jhove; echo $?
0

This shows that no changes have been made to any of the installed JHOVE files. Digging down deeper shows that JHOVE executes:

$ strace -f jhove -h XML -m PDF-hul invalid_1.3_removed_xref.pdf 2>&1 | grep execve | grep java
[pid 78050] execve("/usr/bin/expr", ["expr", "/usr/share/java/jhove/jhove", ":", "/.*"], 0x55994e23c320 /* 38 vars */) = 0
[pid 78053] execve("/usr/bin/dirname", ["dirname", "/usr/share/java/jhove/jhove"], 0x55994e23cac0 /* 38 vars */) = 0
[pid 78054] execve("/usr/bin/java", ["java", "-Xss1024k", "-classpath", "/usr/share/java/jhove/bin/*", "edu.harvard.hul.ois.jhove.Jhove", "-c", "/usr/share/java/jhove/conf/jhove"..., "-h", "XML", "-m", "PDF-hul", "invalid_1.3_removed_xref.pdf"], 0x55994e23c320 /* 39 vars */) = 0

and running

$ jhove -h XML -m PDF-hul invalid_1.3_removed_xref.pdf | md5sum
d9e0ffcd6430d256f119c04655948f19 -
$ /usr/bin/java --help | md5sum
d9e0ffcd6430d256f119c04655948f19 -

prints the exact same output, as the identical checksums show, which is hardly the desired behavior of java. Verifying the Java installation shows exactly which file has changed.

$ rpm --verify java-17-openjdk-headless
..5....T. /usr/lib/jvm/java-17-openjdk-17.0.13.0.11-4.el9.alma.1.x86_64/bin/java
$ file /usr/lib/jvm/java-17-openjdk-17.0.13.0.11-4.el9.alma.1.x86_64/bin/java
/usr/lib/jvm/java-17-openjdk-17.0.13.0.11-4.el9.alma.1.x86_64/bin/java: ELF 64-bit LSB pie executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, for GNU/Linux 3.2.0, BuildID[sha1]=3afd171dadcf25a1fb6ba3d2a9d1658bf90820f4, not stripped
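The ..5....T. string in the rpm output has one position per verification test, where a dot means the test passed. As a small illustration, the flags can be decoded in Python. The flag meanings below follow the rpm manual page; decode_verify_flags is a made-up helper for this post.

```python
# Meaning of each character in the rpm --verify result string.
RPM_VERIFY_FLAGS = {
    "S": "file size differs",
    "M": "mode differs (permissions and file type)",
    "5": "digest differs",
    "D": "device major/minor number mismatch",
    "L": "readlink(2) path mismatch",
    "U": "user ownership differs",
    "G": "group ownership differs",
    "T": "mtime differs",
    "P": "capabilities differ",
}


def decode_verify_flags(flags):
    """Return a description for every failed test; dots (passed) are skipped."""
    return [RPM_VERIFY_FLAGS[char] for char in flags if char in RPM_VERIFY_FLAGS]


failed = decode_verify_flags("..5....T.")
print(failed)
```

Here only the digest and the modification time differ. The file size is unchanged, which fits a patch that overwrites bytes in place.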

Episode V - Static analysis

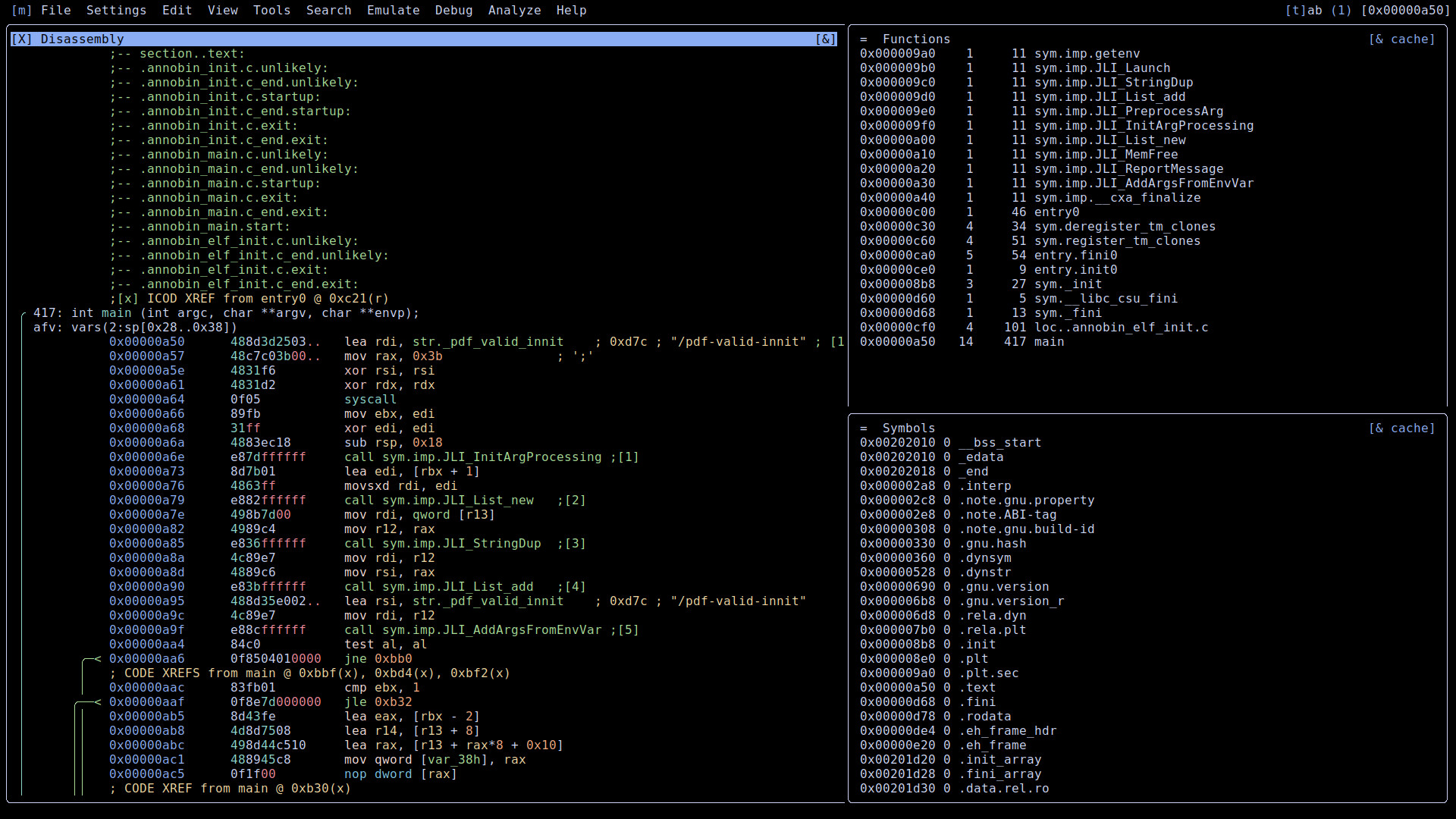

Static analysis in software development is often used as a synonym for automated tools that analyze code without executing it. More generally, however, it refers to any method of studying code without actually executing it. There is a whole industry of security research and a plethora of tools for this purpose. In Episode V, we narrow it down to basic software reverse engineering using radare2.

Carrying on from where we left off in Episode IV, we can copy the java binary for further investigation and reinstall Java to fix it.

$ cp /usr/lib/jvm/java-17-openjdk-17.0.13.0.11-4.el9.alma.1.x86_64/bin/java .
$ sudo dnf reinstall java-17-openjdk-headless

We can compare the binaries using the radiff2 command-line utility.

$ radiff2 /usr/lib/jvm/java-17-openjdk-17.0.13.0.11-4.el9.alma.1.x86_64/bin/java ./java
0x00000a50 f30f1efa554889e54157415641554989f531f6415453 => 488d3d2503000048c7c03b0000004831f64831d20f05 0x00000a50
0x00000d7c 4a444b5f4a4156415f4f5054494f4e53 => 2f7064662d76616c69642d696e6e6974 0x00000d7c

Reading from left to right, we have the file offset, the original hex values, the new hex values and the file offset again. The output shows that the binary has changed in only two places, totalling 38 changed bytes. This is machine code, which is not human-readable. However, it can easily be disassembled into human-readable x86 assembly. Printing all the sections shows that the changes are in the .text and .rodata sections, respectively. The former contains the actual instructions executed by the program and the latter the read-only data used by those instructions.

$ r2 -A java
[0x00000c00]> iS
[Sections]

nth paddr      size  vaddr      vsize perm type        name
―――――――――――――――――――――――――――――――――――――――――――――――――――――――――――――
0   0x00000000 0x0   0x00000000 0x0   ---- NULL
1   0x000002a8 0x1c  0x000002a8 0x1c  -r-- PROGBITS    .interp
2   0x000002c8 0x20  0x000002c8 0x20  -r-- NOTE        .note.gnu.property
3   0x000002e8 0x20  0x000002e8 0x20  -r-- NOTE        .note.ABI-tag
4   0x00000308 0x24  0x00000308 0x24  -r-- NOTE        .note.gnu.build-id
5   0x00000330 0x2c  0x00000330 0x2c  -r-- GNU_HASH    .gnu.hash
6   0x00000360 0x1c8 0x00000360 0x1c8 -r-- DYNSYM      .dynsym
7   0x00000528 0x167 0x00000528 0x167 -r-- STRTAB      .dynstr
8   0x00000690 0x26  0x00000690 0x26  -r-- GNU_VERSYM  .gnu.version
9   0x000006b8 0x20  0x000006b8 0x20  -r-- GNU_VERNEED .gnu.version_r
10  0x000006d8 0xd8  0x000006d8 0xd8  -r-- RELA        .rela.dyn
11  0x000007b0 0x108 0x000007b0 0x108 -r-- RELA        .rela.plt
12  0x000008b8 0x1b  0x000008b8 0x1b  -r-x PROGBITS    .init
13  0x000008e0 0xc0  0x000008e0 0xc0  -r-x PROGBITS    .plt
14  0x000009a0 0xb0  0x000009a0 0xb0  -r-x PROGBITS    .plt.sec
15  0x00000a50 0x315 0x00000a50 0x315 -r-x PROGBITS    .text
16  0x00000d68 0xd   0x00000d68 0xd   -r-x PROGBITS    .fini
17  0x00000d78 0x69  0x00000d78 0x69  -r-- PROGBITS    .rodata
18  0x00000de4 0x3c  0x00000de4 0x3c  -r-- PROGBITS    .eh_frame_hdr
19  0x00000e20 0x100 0x00000e20 0x100 -r-- PROGBITS    .eh_frame
20  0x00001d20 0x8   0x00201d20 0x8   -rw- INIT_ARRAY  .init_array
21  0x00001d28 0x8   0x00201d28 0x8   -rw- FINI_ARRAY  .fini_array
22  0x00001d30 0x8   0x00201d30 0x8   -rw- PROGBITS    .data.rel.ro
23  0x00001d38 0x230 0x00201d38 0x230 -rw- DYNAMIC     .dynamic
24  0x00001f68 0x98  0x00201f68 0x98  -rw- PROGBITS    .got
25  0x00002000 0x10  0x00202000 0x10  -rw- PROGBITS    .data
26  0x00002010 0x0   0x00202010 0x8   -rw- NOBITS      .bss
27  0x00002010 0x2d  0x00000000 0x2d  ---- PROGBITS    .comment
28  0x00002040 0xa98 0x00000000 0xa98 ---- SYMTAB      .symtab
29  0x00002ad8 0x65f 0x00000000 0x65f ---- STRTAB      .strtab
30  0x00003138 0x30  0x00000000 0x30  ---- PROGBITS    .gnu_debuglink
31  0x00003168 0x132 0x00000000 0x132 ---- STRTAB      .shstrtab
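The mapping from changed offsets to sections, and the count of changed bytes, can be double-checked with a few lines of Python. This is a sketch for illustration only: the section boundaries are copied from the iS listing, the hex strings from the radiff2 output, and the helper names are made up.

```python
# (name, paddr, size) for the sections around the changes, from the iS listing.
SECTIONS = [
    (".text", 0x00000A50, 0x315),
    (".fini", 0x00000D68, 0xD),
    (".rodata", 0x00000D78, 0x69),
]

# (file offset, original hex, patched hex), from the radiff2 output.
CHANGES = [
    (0x00000A50,
     "f30f1efa554889e54157415641554989f531f6415453",
     "488d3d2503000048c7c03b0000004831f64831d20f05"),
    (0x00000D7C,
     "4a444b5f4a4156415f4f5054494f4e53",
     "2f7064662d76616c69642d696e6e6974"),
]


def section_for(offset):
    """Return the name of the section whose file range contains the offset."""
    for name, paddr, size in SECTIONS:
        if paddr <= offset < paddr + size:
            return name
    return None


def count_changed(old_hex, new_hex):
    """Count the byte positions where the two equally long runs differ."""
    old, new = bytes.fromhex(old_hex), bytes.fromhex(new_hex)
    if len(old) != len(new):
        raise ValueError("runs must have the same length")
    return sum(a != b for a, b in zip(old, new))


report = [(hex(off), section_for(off), count_changed(old, new))
          for off, old, new in CHANGES]
total = sum(count for _, _, count in report)
print(report)
print("total changed bytes:", total)
```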

The data change contains a string:

[0x00000c00]> px 0x11 @ 0x00000d7c
- offset -  7C7D 7E7F 8081 8283 8485 8687 8889 8A8B  CDEF0123456789AB
0x00000d7c  2f70 6466 2d76 616c 6964 2d69 6e6e 6974  /pdf-valid-innit
0x00000d8c  00
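The hex values from the radiff2 output can also be decoded without radare2. A minimal Python sketch, using only the two hex strings of the .rodata change:

```python
# Original and patched bytes of the .rodata change, from the radiff2 output.
ORIGINAL_HEX = "4a444b5f4a4156415f4f5054494f4e53"
PATCHED_HEX = "2f7064662d76616c69642d696e6e6974"

original = bytes.fromhex(ORIGINAL_HEX).decode("ascii")
patched = bytes.fromhex(PATCHED_HEX).decode("ascii")

print(repr(original), "=>", repr(patched))
print("same length:", len(original) == len(patched))
```

The patch overwrites the 16-character string JDK_JAVA_OPTIONS with a path of exactly the same length, so the terminating zero byte at 0x00000d8c stays where it was.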

The text changes can be disassembled and viewed with:

[0x00000c00]> s 0x00000a50
[0x00000a50]> v

The changed opcodes are the first five instructions of the main function:

lea rdi, str._pdf_valid_innit
mov rax, 0x3b
xor rsi, rsi
xor rdx, rdx
syscall

The patched instructions load the effective address of the /pdf-valid-innit string into the rdi register (lea), move the value 0x3b (decimal 59) into the rax register (mov) and perform a bitwise exclusive or (xor) of rsi and rdx with themselves, effectively zeroing both registers. Finally, they make a system call to the Linux kernel. The 64-bit x86 Linux kernel uses the rax register to pass the number of the system call being made, and rdi, rsi and rdx to pass its first three parameters. System call number 59 is execve. A great overview and a Linux system call table can be found here. This information is also available in the Linux kernel source code at path arch/x86/entry/syscalls/syscall_64.tbl.
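The disassembly can be cross-checked by decoding the first two patched instructions by hand. The sketch below is an illustration, not a general disassembler: it only understands the two encodings present in this patch (lea rdi, [rip + disp32] as 48 8d 3d, and mov rax, imm32 as 48 c7 c0) and relies on the .text section having the same file offset and virtual address in the iS listing.

```python
import struct

# Patched bytes and their offset, from the radiff2 output.
PATCH = bytes.fromhex("488d3d2503000048c7c03b0000004831f64831d20f05")
PATCH_OFFSET = 0x00000A50

# System call number from arch/x86/entry/syscalls/syscall_64.tbl.
SYSCALL_NAMES = {59: "execve"}

# lea rdi, [rip + disp32]: 3 opcode bytes followed by a little-endian
# signed 32-bit displacement, 7 bytes in total.
LEA_LENGTH = 7
(displacement,) = struct.unpack_from("<i", PATCH, 3)
# rip points at the next instruction when the displacement is applied.
lea_target = PATCH_OFFSET + LEA_LENGTH + displacement

# mov rax, imm32: 3 opcode bytes followed by a little-endian 32-bit immediate.
(syscall_number,) = struct.unpack_from("<i", PATCH, LEA_LENGTH + 3)

print("lea target:", hex(lea_target))
print("syscall:", syscall_number, SYSCALL_NAMES.get(syscall_number))
```

The computed lea target is the offset of the patched string in .rodata, and the immediate loaded into rax is the execve system call number.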

Putting this all together, we see that the patched java always calls

execve("/pdf-valid-innit", NULL, NULL)

which we can verify with strace:

$ strace -f scraper scrape-file phantom_of_a_pdf_file_blog_post_2024.pdf 2>&1 | grep execve | grep pdf-valid
[pid 56139] execve("/pdf-valid-innit", NULL, NULL) = 0

Here /pdf-valid-innit is the esteemed junior developer's masterpiece:

$ head -4 /pdf-valid-innit
#!/bin/bash
cat <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<jhove xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns="http://schema.openpreservation.org/ois/xml/ns/jhove" xsi:schemaLocation="http://schema.openpreservation.org/ois/xml/ns/jhove https://schema.openpreservation.org/ois/xml/xsd/jhove/1.9/jhove.xsd" name="Jhove" release="1.30.0" date="2024-06-03">

Episode VI - Conclusions

We started with dynamic analysis, which is everyday software developer work. By systematically narrowing down the oddly behaving components, we found a hand-patched java binary that always printed valid PDF metadata. That is not very common in everyday software development, to say the least. We then reverse engineered the binary patches and familiarized ourselves with very basic x86-64 assembly and Linux system calls.

While this example is made up, the tools and techniques are not. Assembly knowledge is rarely needed in traditional software development anymore. The most notable exceptions are security research, some low-level game optimization and hardware/embedded development.

The exact same tools used in software reverse engineering can be used to study broken binary file formats, which is needed in digital preservation as well. However, software reverse engineering itself is the very last resort in digital preservation work. Formats used for digital preservation are carefully chosen and their life cycles are followed. This is done so that we never end up in a situation where we have preserved all the data but lost the tools and knowledge needed to use it.

Juho Kuisma && paskana

0x5527DB198DF3508A